The future of AI is no longer purely Cloud-based; it is being defined by a fundamental move away from centralised data centres towards a distributed network of Edge devices. This is a direct response to a critical challenge: Cloud-based AI adds a network round trip to every request, and that latency cannot meet the demand for real-time decision-making in mission-critical applications.

The AI inference market, now the front line of this shift, is projected to grow from an estimated €113.47 billion in 2025 to over €253 billion by 2030, a clear indicator of the imperative to bring intelligence closer to the source.
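To put that projection in annual terms, the two endpoints can be converted into an implied compound annual growth rate. The short Python sketch below is illustrative only: it assumes smooth compounding over the five years from 2025 to 2030 and treats "over €253 billion" as exactly €253 billion, so the result is a rough lower bound of about 17% a year rather than a published forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of euros.
# Assumption: "over 253" is taken as exactly 253.
rate = implied_cagr(113.47, 253.0, 2030 - 2025)
print(f"Implied annual growth: {rate:.1%}")
```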

The primary hurdle to this transition is the ‘inference bottleneck’, as Edge devices typically lack the computational power and memory capacity required for the many floating-point matrix operations that define Large Language Models (LLMs). The industry is answering this with a multi-pronged approach, moving from general-purpose silicon to purpose-built, hyper-efficient architectures that are fundamentally suited to the demands of the Edge.
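A back-of-the-envelope calculation shows why memory alone is a constraint. The sketch below estimates the storage needed just for a model's weights at different numeric precisions. The 7-billion-parameter model size is a hypothetical example, not a figure from any product mentioned here, and the estimate ignores activations, caches and runtime overhead, so real requirements would be higher.

```python
# Bytes needed to store one weight at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical model size chosen purely for illustration.
PARAMS = 7e9

for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(PARAMS, precision):.1f} GB")
```

Under these assumptions, halving the precision halves the weight storage, which is one reason lower-precision arithmetic features so heavily in Edge-oriented hardware.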

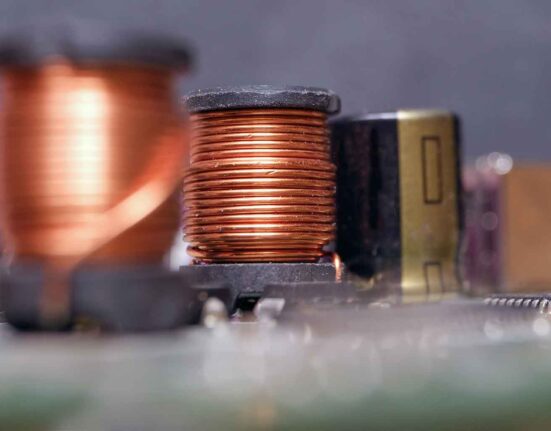

Hyper-efficient silicon and AI accelerators

A new generation of AI accelerators is emerging, designed to deliver high performance at a fraction of the power consumption of traditional solutions. Axelera AI’s Metis AI Processing Unit (AIPU) is a prime example of this, pioneering a unique in-memory computing architecture. By processing data where it is stored, this architecture minimises data movement, dramatically reducing latency and energy usage. The Metis AIPU delivers 214 TOPS, a significant level of performance for devices like smart cameras and drones operating in tightly enclosed or battery-powered environments. This approach allows developers to integrate powerful AI without the usual trade-offs in thermal management and battery life.

This same need for power and reliability is being met by more powerful embedded computing platforms. Innodisk’s Apex Series is engineered for intense workloads, including Machine Learning, Hyper-Converged Infrastructure (HCI), and complex LLM applications. These systems deliver extreme performance and ultra-low latency, and are backed by a comprehensive ecosystem of industrial-grade SSDs and DRAM modules. For example, the APEX-X100 uses NVIDIA RTX 6000 Ada accelerators, making it suitable for high-precision medical imaging and High-Performance Computing (HPC) applications. Its durable design, which includes wide temperature support and resistance to vibration, ensures these solutions are reliable enough for deployment in harsh industrial environments and smart city infrastructure.

Mission-proven hardware for critical domains

The imperative for power and reliability is greatest in mission-critical environments. For defence, aerospace, and space, standard embedded computing is not enough. Aitech Systems, a long-established developer of rugged embedded systems, builds GPGPU-based AI supercomputers on NVIDIA’s Orin architecture. The space-qualified S-A2300, for instance, is designed for Low Earth Orbit (LEO) missions, delivering the computational power necessary for autonomous satellite operations. In the air, its A178-AV system integrates with avionic platforms to enhance navigation and pilot situational awareness. These systems are engineered to withstand extreme temperature fluctuations, shock, and vibration, ensuring operational integrity where failure is not an option.

Streamlining development for autonomy

The challenge of bringing AI to the Edge is not solely a hardware problem; it is also a question of development. The process of transitioning AI models to embedded systems has been complex and prone to vendor lock-in. A new focus on open, vendor-agnostic platforms is beginning to streamline this process, making powerful AI development accessible to a wider range of engineers and businesses.

The Alp Lab Edge-1 AI Module (E1M) embodies this principle. This unified, plug-and-play module features a standardised pinout and a single software stack (the Alp SDK) that eliminates traditional vendor dependency. Developers can move between different processor architectures – from Alif Semi to Renesas and Qualcomm – using the same hardware design and user code. This approach greatly reduces development time and costs, liberating engineers from vendor lock-in and allowing them to focus on innovation.

Modularity serves the same goal at the system level. The Innodisk Apex Series is based on a modular architecture that allows for extensive customisation, and it is supported by Innodisk’s own industrial-grade SSDs and DRAM modules, giving a cohesive and integrated solution for complex industrial deployments and smart city infrastructure.

The evolution continues with microcontrollers that deliver Generative AI in power-constrained environments. Alif Semiconductor’s Ensemble GenAI products are purpose-built with an AI-ready architecture, featuring the Arm Ethos-U85 NPU, which supports transformer-based ML networks. For example, an E4 device draws only 36 mW when generating text, the kind of efficiency needed for applications in robotics and smart city equipment.

The future of AI is not a single, centralised entity, but a distributed, intelligent network. Speak to Astute Group – the more intelligent choice.